Enterprise Documentation

Rate Limiting the API Gateway (Redis backed)

Document updated on Feb 2, 2023

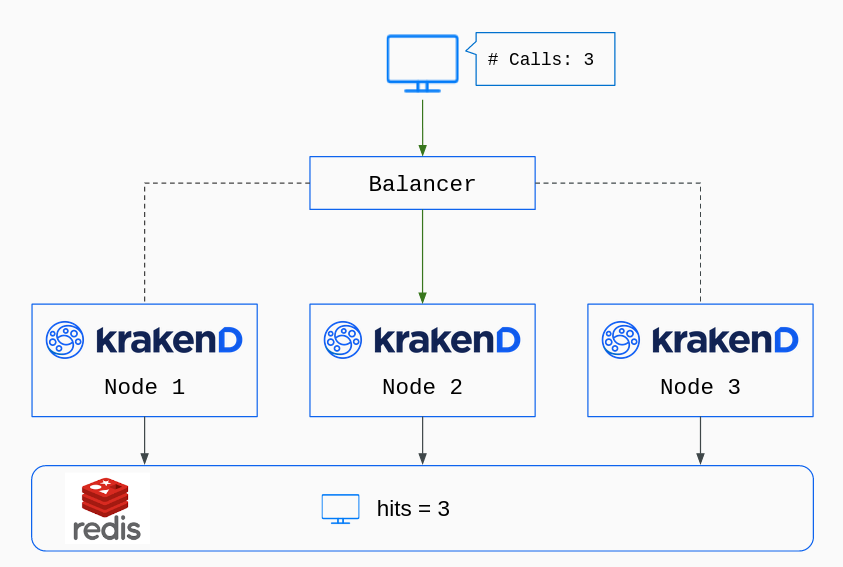

The global rate limit uses a Redis database to centralize the counters of all KrakenD nodes. Instead of each KrakenD node counting its own hits, the counters are global and stored in the database.

Default rate limit (stateless) vs. Global rate limit (stateful)

It’s essential to understand the differences between these two opposing approaches, so let’s look at an example.

Let’s say you have four different KrakenD nodes running in a cluster, and you want to limit a specific set of users to 100 requests per second.

With the default rate limit, every KrakenD node knows only about itself. Whatever happens on the other nodes is invisible to it. Since you have four KrakenD boxes, you need to set a limit of 25 reqs/s in the configuration. When all nodes are running simultaneously and the load is balanced equally, you get an aggregate limit of 100 reqs/s. However, users can start seeing rejections when traffic approaches, but has not yet reached, the total configured limit, because some nodes will have reached their limit already while others still have a margin. If you ever deploy a fifth machine with the same configuration, your total rate limit rises to 125 reqs/s, because the new node adds 25 reqs/s of capacity. Conversely, if you remove one of the four nodes, your total rate limit drops to 75 reqs/s.
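The arithmetic behind the stateless example can be sketched in a few lines of Go. It assumes every node carries the same per-node limit and receives an equal share of the traffic. `effectiveClusterLimit` is a hypothetical helper for illustration, not part of KrakenD.

```go
package main

import "fmt"

// effectiveClusterLimit returns the aggregate limit a cluster enforces when
// every node applies the same stateless, node-local limit (hypothetical helper).
func effectiveClusterLimit(perNodeLimit, nodes int) int {
	return perNodeLimit * nodes
}

func main() {
	const perNode = 25 // reqs/s written in each node's configuration
	for _, nodes := range []int{3, 4, 5} {
		fmt.Printf("%d nodes -> %d reqs/s\n", nodes, effectiveClusterLimit(perNode, nodes))
	}
}
```

The aggregate limit moves with the node count (75, 100, and 125 reqs/s), which is exactly the drift described above.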

The global rate limit, on the other hand, makes the nodes aware of what is happening on their neighbors. This awareness comes from all KrakenD nodes reading and writing the counters in the central database (Redis), so each node knows the real number of hits across the whole platform. The limits stay the same when you add or remove nodes on the fly.
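To illustrate why the global limit does not drift with the cluster size, here is a minimal Go simulation. An in-memory map protected by a mutex stands in for Redis (its `incr` method mimics Redis `INCR`), and a single counting window is assumed, so key expiry is left out. The types and the user key `"user-1"` are hypothetical; this is not how the plugin is implemented.

```go
package main

import (
	"fmt"
	"sync"
)

// sharedStore stands in for Redis: one counter per user, visible to every node.
type sharedStore struct {
	mu     sync.Mutex
	counts map[string]int
}

func newSharedStore() *sharedStore {
	return &sharedStore{counts: make(map[string]int)}
}

// incr atomically increments the user's counter and returns the new value,
// mimicking Redis INCR.
func (s *sharedStore) incr(key string) int {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.counts[key]++
	return s.counts[key]
}

// node is a gateway instance that consults the shared store instead of
// keeping its own counters.
type node struct {
	store *sharedStore
	limit int
}

func (n node) allow(user string) bool {
	return n.store.incr(user) <= n.limit
}

// simulate spreads `requests` round-robin across `nodes` gateways that share
// one store and returns how many requests were accepted in a single window.
func simulate(nodes, requests, limit int) int {
	store := newSharedStore()
	cluster := make([]node, nodes)
	for i := range cluster {
		cluster[i] = node{store: store, limit: limit}
	}
	accepted := 0
	for i := 0; i < requests; i++ {
		if cluster[i%nodes].allow("user-1") {
			accepted++
		}
	}
	return accepted
}

func main() {
	for _, nodes := range []int{3, 4, 5} {
		fmt.Printf("%d nodes -> %d of 150 requests accepted\n", nodes, simulate(nodes, 150, 100))
	}
}
```

Every run accepts exactly 100 requests whether 3, 4, or 5 nodes share the load, because all of them increment the same counter.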

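From a business perspective, the global rate limit might sound more attractive. From a technical point of view, however, the default rate limit is much better: it offers virtually unlimited horizontal scalability and much higher throughput, because each node keeps its counters in memory instead of making a round trip to a shared database on every request.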

Our recommendation (speaking as an engineer) is to always use the default rate limit.

Configuration

To enable the global rate limit on KrakenD EE, you need to configure the redis-ratelimit server plugin as shown below:

```json
{
  "$schema": "https://www.krakend.io/schema/v2.6/krakend.json",
  "version": 3,
  "plugin": {
    "pattern": ".so",
    "folder": "/opt/krakend/plugins/"
  },
  "extra_config": {
    "plugin/http-server": {
      "name": [
        "redis-ratelimit",
        "some-other-plugin-here"
      ],
      "redis-ratelimit": {
        "host": "redis:6379",
        "tokenizer": "jwt",
        "tokenizer_field": "sub",
        "burst": 10,
        "rate": 10,
        "period": "60s"
      }
    }
  }
}
```

Notes: The entry "some-other-plugin-here" in the name list above demonstrates how to load more than one router plugin.

The limits apply to each user globally, across all endpoints.

The configuration options are described below:

Fields of Redis ratelimit

| Field | Description |
|---|---|
| burst | How many requests a client can make above the specified rate during a peak. |
| host | The address of the Redis instance that stores the counters, using the format host:port. Examples: "redis", "redis:6379". |
| period | The time window over which rate is counted. Usually in seconds, minutes, or hours; e.g., use 120s or 2m for two minutes. Specify units using ns (nanoseconds), us or µs (microseconds), ms (milliseconds), s (seconds), m (minutes), or h (hours). |
| rate | Number of allowed requests during the observed period. |
| tokenizer | One of the preselected strategies to rate-limit users. Possible values are: "jwt", "ip", "url", "path", "header", "param", "cookie". |
| tokenizer_field | A custom field for the tokenizer (e.g., extracting the token from a custom header other than Authorization, or using a JWT claim other than jti). |

The different tokenizer strategies answer the question: what counts as a “user” for you? Valid values are:

- jwt: When users are authorized with JWT tokens, the jti claim is used as the unique identifier (or the claim set in tokenizer_field).
- ip: Every different IP is considered a new user.
- url: The whole requested URL is hashed and used as the identifier.
- path: Users are rate-limited by the requested path.
- header: A custom header identifies the user.
- param: A request parameter contains the identifier to limit on.
- cookie: The cookie content defines the user.