Document updated on Dec 12, 2024

Stateful endpoint rate limit (Redis backed)

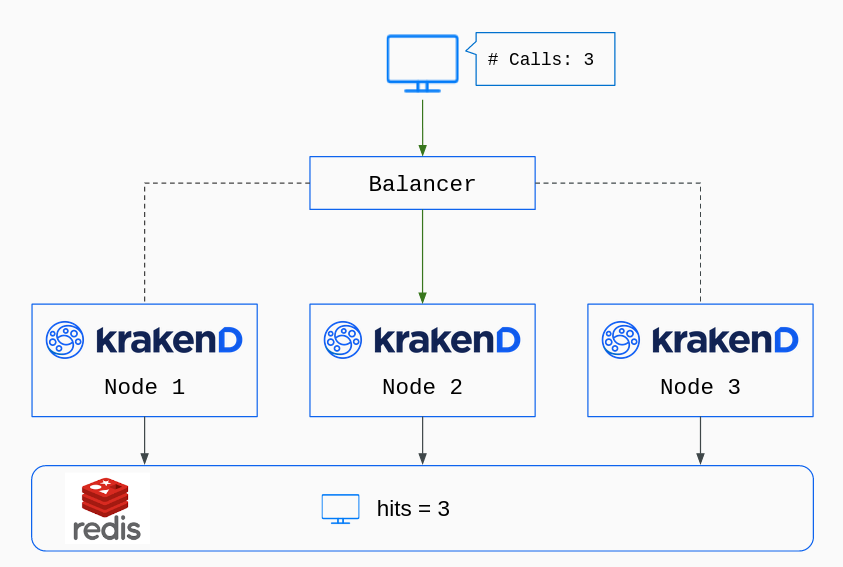

The Stateful Endpoint Rate Limit based on Redis centralizes the rate-limiting activity of all KrakenD instances in a Redis database. It works identically to the stateful service rate limit but applies the limits to specific endpoints instead of the whole service.
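Because the limits live in each endpoint's extra_config, every endpoint can carry its own quota. The fragment below is a sketch, not a complete configuration. The /bar path and the numbers are hypothetical. It assumes a Redis pool named shared_instance is declared in the service-level redis namespace, as in the configuration example of the next section.

```json
{
  "endpoints": [
    {
      "endpoint": "/foo",
      "extra_config": {
        "qos/ratelimit/router/redis": {
          "connection_name": "shared_instance",
          "client_max_rate": 10,
          "every": "1m",
          "strategy": "ip"
        }
      }
    },
    {
      "endpoint": "/bar",
      "extra_config": {
        "qos/ratelimit/router/redis": {
          "connection_name": "shared_instance",
          "client_max_rate": 100,
          "every": "1m",
          "strategy": "ip"
        }
      }
    }
  ]
}
```

Counters are kept per client and per endpoint. A client that has used its 10 requests per minute on /foo can therefore still call /bar.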

You should always use the stateless rate limit unless an unavoidable business rule forces you to choose this one. The stateless rate limit does not need any database and does not generate network activity to manage the token bucket. From a functional perspective, both rate limits, stateless and stateful, offer the same features. The difference is that with the stateless rate limit each node counts only its own traffic, while the stateful one counts the traffic of all nodes together. But don’t be misled: with a stateless rate limit, you can achieve the same results without adding a network dependency, even when you scale your infrastructure up and down.
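For comparison, the stateless endpoint rate limit is declared with the qos/ratelimit/router namespace and needs no connection pool. The sketch below assumes the per-client fields described in this document behave the same way there. The path and values are illustrative only.

```json
{
  "endpoints": [
    {
      "endpoint": "/foo",
      "extra_config": {
        "qos/ratelimit/router": {
          "client_max_rate": 10,
          "client_capacity": 10,
          "every": "1m",
          "strategy": "ip"
        }
      }
    }
  ]
}
```

Each node counts only its own traffic. If a load balancer spreads one client's requests across three nodes, this fragment could therefore let that client through up to 3 × 10 = 30 times per minute. You would size the per-node value with your node count in mind.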

Redis rate-limit endpoint configuration

To configure Redis-backed rate limits on your endpoints, you must declare at least two namespaces: one with the Redis configuration at the service level (the redis namespace), and another that sets the rate limit values in the desired endpoint’s extra_config under the namespace qos/ratelimit/router/redis.

The following configuration enables 10 requests per minute to the /foo endpoint, based on the identity of the sub claim present in the JWT token:

{
  "$schema": "https://www.krakend.io/schema/v2.10/krakend.json",
  "version": 3,
  "extra_config": {
    "redis": {
      "connection_pools": [
        {
          "name": "shared_instance",
          "address": "redis:6379"
        }
      ]
    }
  },
  "endpoints": [
    {
      "endpoint": "/foo",
      "extra_config": {
        "qos/ratelimit/router/redis": {
          "connection_name": "shared_instance",
          "on_failure_allow": false,
          "client_max_rate": 10,
          "client_capacity": 10,
          "every": "1m",
          "strategy": "jwt",
          "key": "sub"
        }
      }
    }
  ]
}

The redis namespace accepts many Redis pool options; the two basic ones are name and address, as shown above. The qos/ratelimit/router/redis namespace then defines how the rate limit works in this endpoint. The properties you can set in this namespace are the same as in the stateful service rate limit:

Fields of "qos/ratelimit/redis"

Minimum configuration needs any of: connection_pool + max_rate, connection_pool + client_max_rate, connection_name + max_rate, or connection_name + client_max_rate.
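As an illustration of the connection_name + max_rate combination, the fragment below caps the hypothetical /reports endpoint at 100 requests per minute for all users together, with no per-client counters. It sets capacity to the same number as max_rate, following the advice in the field reference. It assumes a pool named shared_instance is declared in the service-level redis namespace. The path and numbers are examples only.

```json
{
  "endpoints": [
    {
      "endpoint": "/reports",
      "extra_config": {
        "qos/ratelimit/router/redis": {
          "connection_name": "shared_instance",
          "max_rate": 100,
          "capacity": 100,
          "every": "1m"
        }
      }
    }
  ]
}
```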

capacity (integer): Defines the maximum number of tokens a bucket can hold, or said otherwise, how many requests you will accept from all users together at any given instant. When the gateway starts, the bucket is full. As requests from users come, the remaining tokens in the bucket decrease. At the same time, the max_rate refills the bucket at the desired rate until its maximum capacity is reached. The default value for the capacity is the max_rate value expressed in seconds or 1 for smaller fractions. When unsure, use the same number as max_rate. Defaults to 1.

client_capacity (integer): Defines the maximum number of tokens a bucket can hold, or said otherwise, how many requests you will accept from each individual user at any given instant. Works just as capacity, but instead of having one bucket for all users, keeps a counter for every connected client and endpoint, and refills from client_max_rate instead of max_rate. The client is recognized using the strategy field (an IP address, a token, a header, etc.). The default value for the client_capacity is the client_max_rate value expressed in seconds or 1 for smaller fractions. When unsure, use the same number as client_max_rate. Defaults to 1.

client_max_rate (number): Number of tokens you add to the Token Bucket for each individual user (user quota) in the time interval you want (every). The remaining tokens in the bucket are the requests a specific user can do. It keeps a counter for every client and endpoint. Keep in mind that every KrakenD instance keeps its counters in memory for every single client.

connection_name (string): The connection pool name or cluster name used by this rate limit. The value must match what you configured in the Redis Connection Pool.

connection_pool (string, deprecated): The connection pool name used by this rate limit. The value must match what you configured in the Redis Connection Pool.

every (string): Time period in which the maximum rates operate. For instance, if you set an every of 10m and a rate of 5, you are allowing 5 requests every ten minutes. Specify units using ns (nanoseconds), us or µs (microseconds), ms (milliseconds), s (seconds), m (minutes), or h (hours). Defaults to "1s".

key (string): Available when using client_max_rate and you have set a strategy equal to header or param. It makes no sense in other contexts. For header, it is the header name containing the user identification (e.g., Authorization on tokens, or X-Original-Forwarded-For for IPs). When the header contains a list of space-separated IPs, it takes the IP of the client that hit the first trusted proxy. For param, it is the name of the placeholder used in the endpoint, like id_user for an endpoint /user/{id_user}. Examples: "X-Tenant", "Authorization", "id_user".

max_rate (number): Sets the maximum number of requests all users can do in the given time frame. Internally uses the Token Bucket algorithm. The absence of max_rate in the configuration, or a value of 0, is equivalent to no limitation. You can use decimals if needed.

on_failure_allow (boolean): Whether you want to allow a request to continue when the Redis connection is failing. The default behavior blocks the request if Redis is not responding correctly. Defaults to false.

strategy: Available when using client_max_rate. Sets the strategy you will use to set client counters. Choose ip when the restrictions apply to the client’s IP address, or set it to header when there is a header that identifies a user uniquely. That header must be defined with the key entry. Possible values are: "ip", "header", "param".
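The key and strategy fields combine as in the sketch below. The first hypothetical endpoint counts requests per tenant through an X-Tenant header and lets traffic through if Redis fails. The second counts requests per id_user placeholder, allowing 5 requests every ten minutes as in the every description. Endpoint paths, the header name, and all values are assumptions for illustration. A pool named shared_instance is assumed to exist in the redis namespace.

```json
{
  "endpoints": [
    {
      "endpoint": "/tenant/data",
      "extra_config": {
        "qos/ratelimit/router/redis": {
          "connection_name": "shared_instance",
          "on_failure_allow": true,
          "client_max_rate": 50,
          "client_capacity": 50,
          "every": "1m",
          "strategy": "header",
          "key": "X-Tenant"
        }
      }
    },
    {
      "endpoint": "/user/{id_user}",
      "extra_config": {
        "qos/ratelimit/router/redis": {
          "connection_name": "shared_instance",
          "client_max_rate": 5,
          "client_capacity": 5,
          "every": "10m",
          "strategy": "param",
          "key": "id_user"
        }
      }
    }
  ]
}
```

Setting on_failure_allow to true trades strict enforcement for availability. While Redis is not responding, requests to /tenant/data are not blocked, whereas /user/{id_user} keeps the default behavior and blocks them.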